With modern AI tools, developers can move quickly from idea to execution, especially in the early stages of development. Tasks that once took hours can now be done in minutes, as AI handles much of the groundwork automatically. That visible speed makes it tempting to believe that software development itself has become easier. However, faster development alone cannot sustain AI-generated code quality over time.

Getting the first release out the door is just the beginning. Over a product’s lifetime, most of a developer’s time goes into understanding and working with existing code rather than building new features from scratch, and that code is often maintained by engineers who were not involved in the original implementation. Once that handover happens, speed is no longer the deciding factor; long-term maintainability and engineering discipline define the outcome.

At this stage, AI-generated code quality depends on structure and review rather than speed. Clear structure, consistent standards, and careful decisions make changes safer and less disruptive. Without that foundation, even well-written code becomes harder to maintain as complexity grows, which is why long-term stability still relies on deliberate engineering practices.

This article looks at how software development actually changed after AI-assisted tools became widely used: what improved immediately and what did not. It explores what happens when AI-generated code changes hands, corrects common assumptions, and explains why developer skill remains the strongest factor in long-term software quality. It also shows how AI shifts effort from writing code toward reviewing and maintaining it, without removing that effort.

The goal is not to question AI’s value, but to set realistic expectations about where speed helps and where engineering still does the heavy lifting.

How Software Development Looked Before and After AI-Assisted Tools

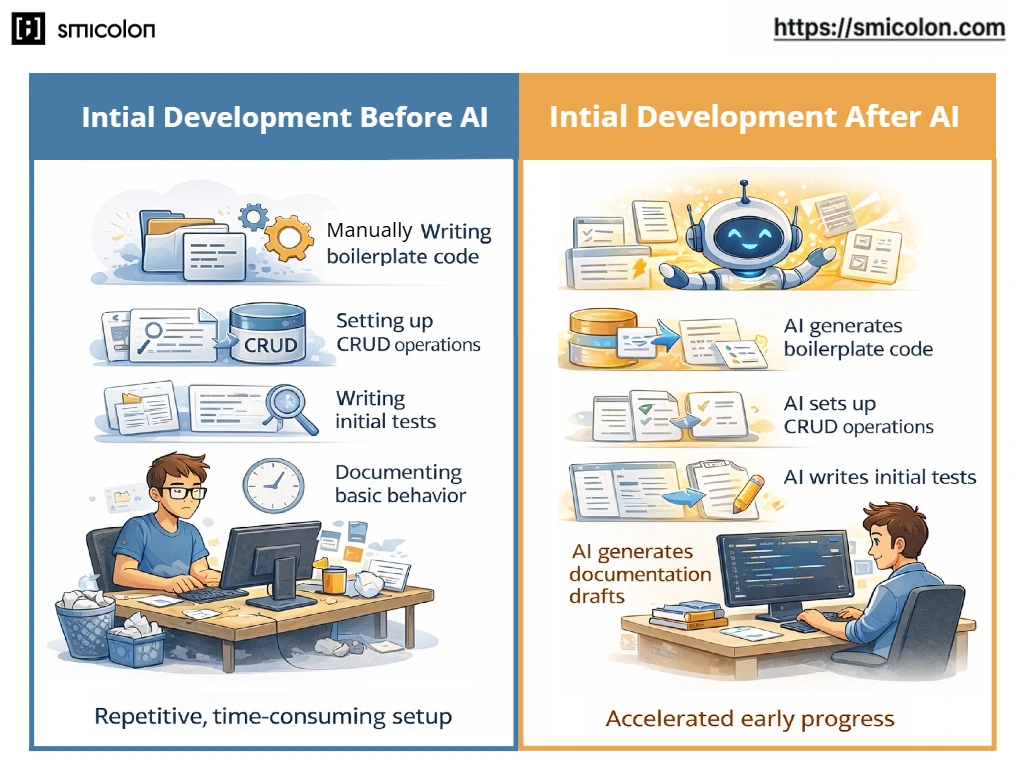

Before AI-assisted tools entered everyday development workflows, a large portion of a developer’s time was spent on predictable yet unavoidable tasks. Setting up a new feature meant writing boilerplate from scratch, wiring CRUD operations, drafting initial tests, and documenting basic behaviour. Progress happened, but it often felt slow at the start. Real problem-solving usually came only after hours or days of groundwork.

After AI-assisted tools became widely used, that early phase started to look very different. Tasks that follow common patterns, such as generating basic endpoints, form handling, unit test scaffolding, or documentation drafts, can now be produced almost instantly. Developers no longer need to start from a blank file for routine work. Instead, they begin with a rough first version and refine it.
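To make “tasks that follow common patterns” concrete, here is a minimal Python sketch of the kind of CRUD scaffolding AI tools tend to draft almost instantly. The `Task` and `TaskStore` names are hypothetical, and an in-memory dictionary stands in for a real database; it is an illustration under those assumptions, not a recommended design.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Task:
    id: int
    title: str
    done: bool = False


@dataclass
class TaskStore:
    """Hypothetical in-memory CRUD store, standing in for a real database."""

    _items: Dict[int, Task] = field(default_factory=dict)
    _next_id: int = 1

    def create(self, title: str) -> Task:
        # Assign sequential ids; a real store would delegate this to the database.
        task = Task(id=self._next_id, title=title)
        self._items[task.id] = task
        self._next_id += 1
        return task

    def read(self, task_id: int) -> Optional[Task]:
        return self._items.get(task_id)

    def update(
        self, task_id: int, *, title: Optional[str] = None, done: Optional[bool] = None
    ) -> Optional[Task]:
        task = self._items.get(task_id)
        if task is None:
            return None
        # Only overwrite the fields the caller actually passed.
        if title is not None:
            task.title = title
        if done is not None:
            task.done = done
        return task

    def delete(self, task_id: int) -> bool:
        # Returns False for unknown ids instead of raising: a silent design choice.
        return self._items.pop(task_id, None) is not None
```

Even a draft this small makes silent choices, such as returning `False` rather than raising when deleting an unknown id, that a developer still has to check against the conventions of the surrounding codebase.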

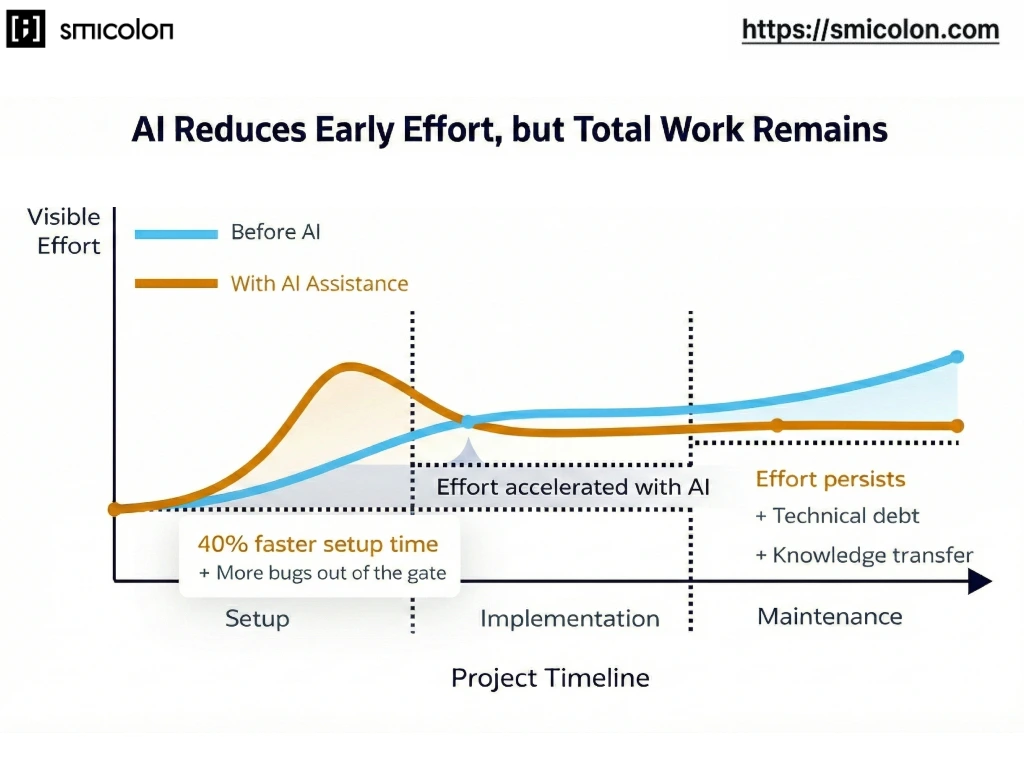

This shift has a noticeable impact on speed, especially early on. Many teams report that routine coding tasks take significantly less time with AI assistance, which lets them reach a working version faster. That is why AI is often described as a productivity boost: it compresses the setup and repetition that used to dominate the beginning of a project. Jellyfish’s article on the benefits of AI in software development describes the same pattern, with AI having its strongest impact on repetitive, early-stage work.

This difference is most visible when teams are building early versions or MVPs. Before AI, teams often spent weeks on setup before they could get anything usable in front of users. With AI-assisted tools, teams can assemble a first version much sooner, test ideas earlier, and iterate faster. That early momentum feels real, and it is.

But this does not mean the entire development process suddenly becomes faster. AI is strongest where patterns already exist. It helps most with repetition and first drafts, not with deciding what should be built or how a system should evolve. Developers still need to review what AI produces, adjust it to fit their codebase, and decide whether it actually makes sense.

In short, AI-assisted tools reshape the early stages of software development by reducing setup effort and speeding up initial progress. This makes it easier to get started and iterate sooner. How that speed holds up once software moves beyond its first version is a separate question.

What Happens to AI-Generated Code Quality When Code Changes Hands

Once AI-generated code changes hands, the nature of the work shifts. This usually happens when a developer steps in to update or extend a feature that someone else originally built. In that situation, speed matters less; understanding the code well enough to change it safely becomes the priority.

This is the point where the limits of AI in software development become clear. AI can generate working code, but that code does not record the reasoning behind its design decisions. When the reasoning is not evident in the code, the next developer has to reconstruct it before making even small changes.

At this stage, the work shifts away from writing new code toward understanding existing behaviour, validating changes, and preventing regressions. Developers spend more time reading, reasoning, and testing than implementing, and that effort does not shrink just because AI was used earlier.

Most maintenance effort is spent building understanding rather than producing new code. Developers need to reconstruct intent, trace data flow, and reason about existing behaviour before they can safely make changes. This work is unavoidable, regardless of whether the original code was written manually or generated with AI.

As systems grow, this effort increases. Dependencies multiply, side effects become harder to spot, and even small changes require broader context. At this point, maintainability is shaped by how readable and well-structured the system is, not by how quickly the first version was created.

Test coverage becomes especially important at this stage. Reliable tests reduce uncertainty and give developers confidence when making changes. Without them, maintenance becomes slower and riskier, whether the code was written by a human or generated with AI.
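As a minimal sketch, assuming a hypothetical pricing rule, the Python example below shows how a short docstring and a few tests can preserve intent that generated code would otherwise leave implicit. The `apply_discount` function and its round-down rule are invented for illustration; the tests pin the behaviour so that a later change which breaks it fails immediately instead of surfacing as a regression.

```python
import unittest


def apply_discount(total_cents: int, percent: int) -> int:
    """Return the discounted total in cents.

    Hypothetical rule for illustration: prices are rounded down, so
    rounding never works against the customer.
    """
    if not 0 <= percent <= 100:
        raise ValueError(f"percent must be between 0 and 100, got {percent}")
    # Integer floor division keeps money in whole cents and rounds down.
    return total_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    def test_rounds_price_down(self):
        # 999 cents at 50% off is 499.5 cents; the rule says 499, not 500.
        self.assertEqual(apply_discount(999, 50), 499)

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(500, 100), 0)

    def test_rejects_out_of_range_percent(self):
        for bad in (-1, 101):
            with self.assertRaises(ValueError):
                apply_discount(1000, bad)


if __name__ == "__main__":
    unittest.main()
```

Running the file as a script executes the tests. If a later refactor, AI-assisted or not, switches to rounding to the nearest cent, `test_rounds_price_down` fails and points the next developer straight at the rule.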

AI, therefore, does not make software easier or harder to maintain on its own. Long-term maintainability is determined by how systems are structured, reviewed, and tested over time. When teams overlook this difference between early speed and long-term structure, they often assume that gains in early development automatically carry over to the entire lifecycle. That assumption is the root of several common myths about what AI has actually changed in software development.

Myth vs Reality: What AI Really Changes in Software Development

This section contrasts the most common assumptions teams make about AI use cases in software development with how AI actually changes the work, separating perception from reality.

Myth: AI makes software development fast from start to finish.

Reality: AI speeds up specific parts of development, not the entire lifecycle.

This is probably the most common assumption teams make after adopting AI tools. AI can generate code and documentation quickly, which creates the impression that the entire development process has sped up. Research from McKinsey shows that developers using generative AI can write new code in 35–45% less time, refactor code in 20–30% less time, and produce documentation more quickly during the early implementation stages. That visible speed often leads to the expectation that if writing code is faster, everything that follows should be faster too.

However, the same research makes an important distinction. These gains are task-specific and appear primarily at the start of development. Later work, such as understanding existing systems, reviewing changes, coordinating with other developers, and maintaining software, does not speed up in the same way. AI does not eliminate the effort required to understand and safely evolve existing code. The software development process still slows wherever judgment, coordination, and deep understanding are required.

Myth: If AI writes the code, maintenance effort will be lower.

Reality: AI does not reduce maintenance effort on its own; engineering decisions do.

This assumption tends to take hold as teams use AI for longer periods. When AI can produce working code in seconds, it feels reasonable to expect that the same code will also be easier to maintain later: if less effort went into writing it, less effort should be needed to understand and change it. That reasoning overlooks code quality problems that often surface only later.

In reality, maintenance effort still depends on human judgment rather than AI output. Despite widespread AI adoption, the Stack Overflow Developer Survey 2023 shows that many developers do not fully trust AI-generated code without reviewing it carefully. GitHub’s “Speed is nothing without control” article also points out that while AI accelerates code production, long-term code health relies on clear engineering decisions and guardrails rather than speed alone. Together, these insights highlight that AI can speed up writing code, but it does not reduce the effort required to maintain it responsibly.

Myth: Using AI reduces the need for experienced developers.

Reality: AI makes developer skills more visible, not less important.

This assumption often comes from watching AI handle tasks that once required senior input, such as generating code, suggesting fixes, or drafting tests. When AI can produce solutions quickly, it’s easy to assume that experience matters less and that junior developers can now deliver the same results with the right tools.

In practice, experience becomes more important as responsibility shifts from execution to judgment. METR findings reported by InfoQ show that AI shifts effort toward review and judgment, reinforcing the importance of experienced developers. As AI takes on more execution work, experienced developers become responsible for evaluating whether the generated code fits the system, handles edge cases correctly, and can be safely maintained over time. Rather than replacing senior expertise, AI makes the impact of those decisions more visible across the system.